December 9, 2025

What Every Clinical Operations Leader Should Know about AI Going into 2026

Jim Wagner

Essay 1 of 3: AI Planning for 2026

If you’re a clinical operations leader heading into 2026, you’re probably asking some version of the same questions: How real is this AI moment? Where should I actually focus? How do I prepare my team without adding to their burden — or mine?

You’re watching the hype. You’re hearing the vendor pitches. And you’re trying to separate what matters from what doesn’t, while still doing your day job.

It isn’t easy for me either, even though we’ve been building AI-based companies for two decades. We’ve watched hype cycles come and go. We’ve seen what delivers and what disappoints.

Generative AI has humbled me. This is different.

Not because of the hype — there’s plenty of that. But because of the speed at which it is evolving. Every 90 days we are seeing substantial increases in the depth and breadth of AI capabilities, including the kind of work that defines clinical operations: document-heavy, coordination-heavy, administratively crushing work that burns out good people and frustrates everyone involved.

We don’t have all the answers. Nobody does; this is moving too fast. But I have dedicated time to this: reading, experimenting myself, building our product and our company, and, most importantly, helping multiple institutions work through AI adoption at the same time. That gives me pattern recognition worth sharing.

This three-part essay series is my attempt to do that: a practical look at what clinical operations leaders should know about AI today, and how to think about it going into 2026.

- Essay 1 (this piece): The December 2025 level-set — what’s actually happening with AI and how to prepare yourself and your team.

- Essay 2: Prioritizing the work — why administrative work is ground zero, and what workflow transformation actually looks like.

- Essay 3: Rethinking ourselves and our organizations — how roles evolve, what governance requires, and where the technology is heading next.

Let’s start with where things actually stand.

Five Things Every Clinical Ops Leader Should Know

If you take one thing from this section, make it this: the constraint on AI in clinical research is not technical. It’s organizational and human.

Here are five truths that should shape your 2026 planning.

Truth 1: AI Usage Is Mainstream

Across industries, AI adoption is no longer experimental. The vast majority of enterprise leaders use generative AI weekly. Nearly half use it daily. Budgets are increasing. And three-quarters of organizations already report positive ROI.

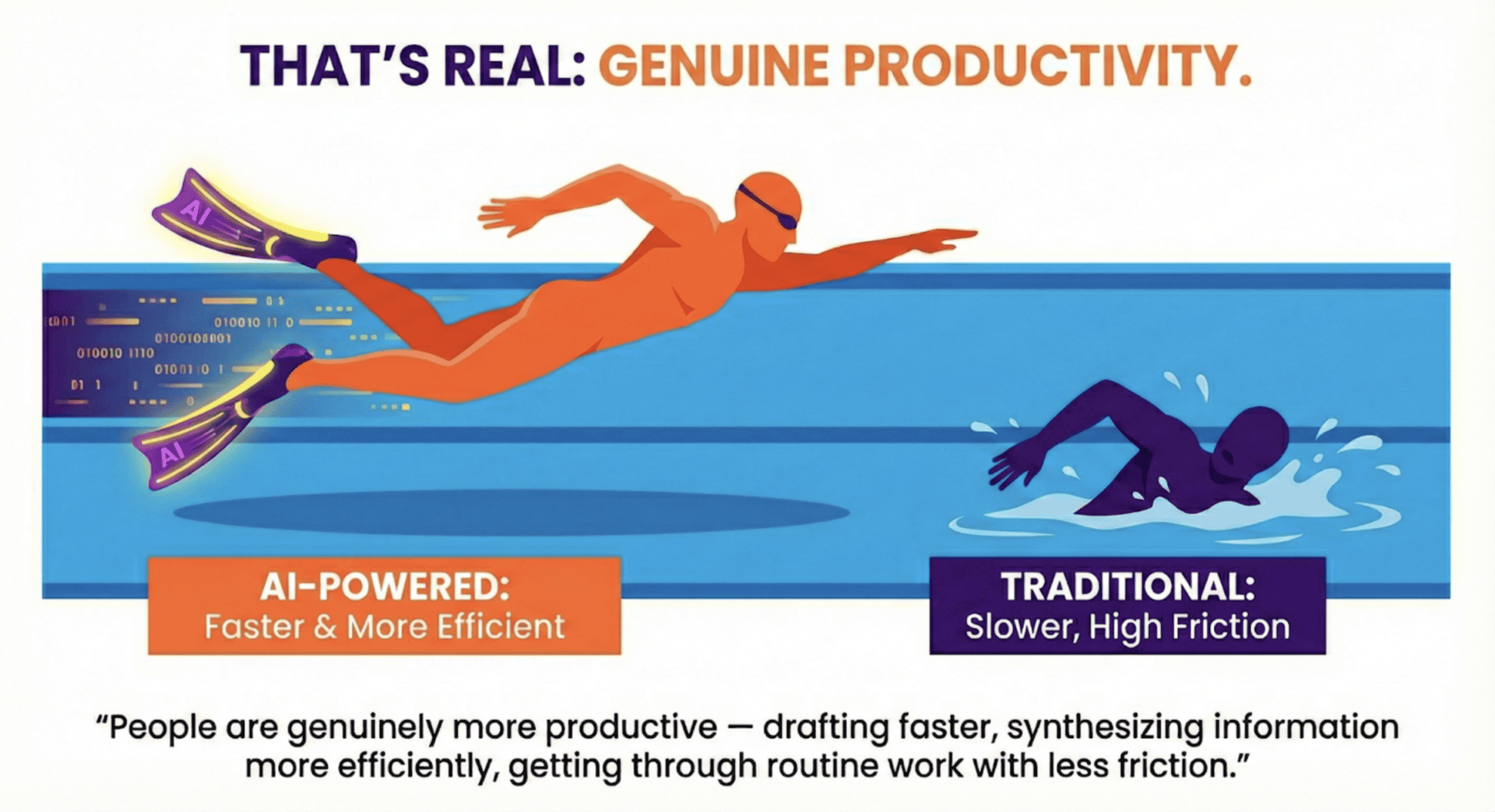

That’s real. People are genuinely more productive — drafting faster, synthesizing information more efficiently, getting through routine work with less friction.

But there’s a gap between individual productivity and organizational impact. Only about 6% of organizations report meaningful top-line or bottom-line transformation, the kind that shows up in financial results.

The difference isn’t access. Everyone has access to the same tools.

The difference is approach. High performers are nearly three times more likely to have fundamentally redesigned workflows, not just added AI to existing processes. They’ve defined where AI acts, where humans validate, and how exceptions are handled.

The good news: the path is clear. Organizations that redesign workflows — rather than just sprinkling AI on top — are seeing results.

Buying AI tools is relatively easy. Managing how the work gets done is hard — and it’s the differentiator.

Truth 2: Clinical Research Has an AI Gap

Our industry agrees AI matters. We’re just not moving fast.

About 85% of sponsors, providers, and sites believe AI will significantly impact clinical research in the next five years. But only about 20% of sponsors and providers report active adoption. Sites lag further — only 9% indicate active adoption.

Meanwhile, study start-up remains a top challenge for roughly a third of sites. Contracts and budgets, the area where I work, remain the leading cause of start-up delays, cited by 73% of sites. And technology is a low-rated satisfaction category: coordinators point to too many systems, poor integration, and weak support.

Almost everyone believes AI will matter. Our collective challenge is to make that belief a reality and to help our people navigate that complexity.

Truth 3: The Biggest Opportunity Is in the Work Your Team Dislikes Most

AI disruption isn’t limited to coders and tech roles.

MIT’s Project Iceberg offers one of the clearest pictures of where AI will actually hit the workforce.

The visible AI disruption — what MIT calls the “Surface Index” — is concentrated in obvious tech roles: software development, data science. That represents about 2.2% of U.S. labor market wage value.

But beneath the surface sits a much larger mass. The “Iceberg Index,” which captures hidden or lower-profile administrative work, represents roughly 12% of wage value, about $1.2 trillion. That is roughly five times larger than the visible disruption.

That’s not in Silicon Valley. It’s in Rochester, Minnesota; Nashville, Tennessee; and Tampa, Florida. Document review, data reconciliation, routine financial calculations, coordination across systems. Administrative work, distributed everywhere.

That’s clinical operations.

Truth 4: The Bottleneck Is Human Capability

While AI capability has accelerated, human capability hasn’t kept pace.

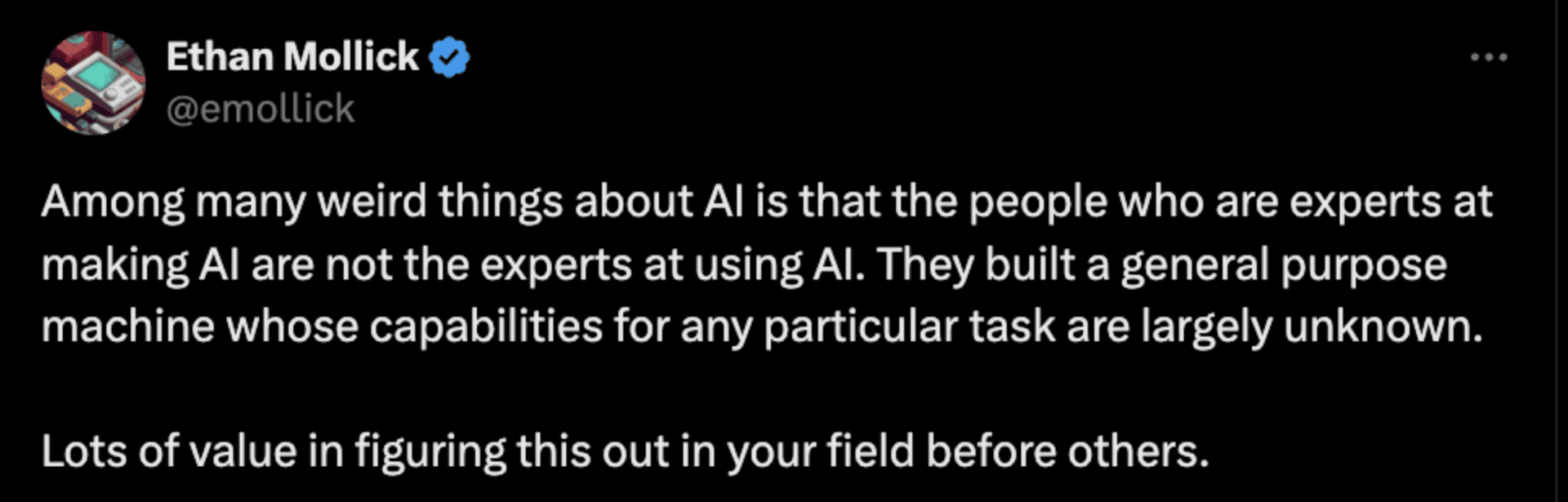

Ethan Mollick, a Wharton professor and AI expert, highlights a key paradox of the current AI landscape.

AI fluency has become one of the fastest-growing skill requirements — not building AI, but using and overseeing it. Yet only about 27% of clinical research sites report adequate training for staff. Training budgets have been flat or declining even as AI investment rises.

You can’t buy your way out of this with technology contracts. You can’t outsource it to IT. You’ll either build AI fluency in yourself and your team, or find yourself with powerful tools that don’t deliver value or safety.

Truth 5: AI Is Moving from “Assist” to Something More

Today, most AI is assistive: AI drafts, humans review and send. The human is in every loop, both to start the process and to finish it.

That’s changing. AI systems are becoming capable of monitoring workflows, executing routine tasks, and escalating only exceptions. Humans are moving from doing the work to designing and overseeing the system that does the work.

Humans are moving from doing the work to designing and overseeing the system that does the work.

[Figure: AI performance relative to “expert humans” (average of 14 years of experience)]

We’ll go deeper on this shift in Essays 2 and 3. For now, what matters is this: part of your job, and your team’s, will evolve from “How do I get this work done?” to “How do I design, oversee, and improve a system where people and AI work together?”

Preparing for that starts with building your own fluency.

Preparing Yourself and Your Team for 2026

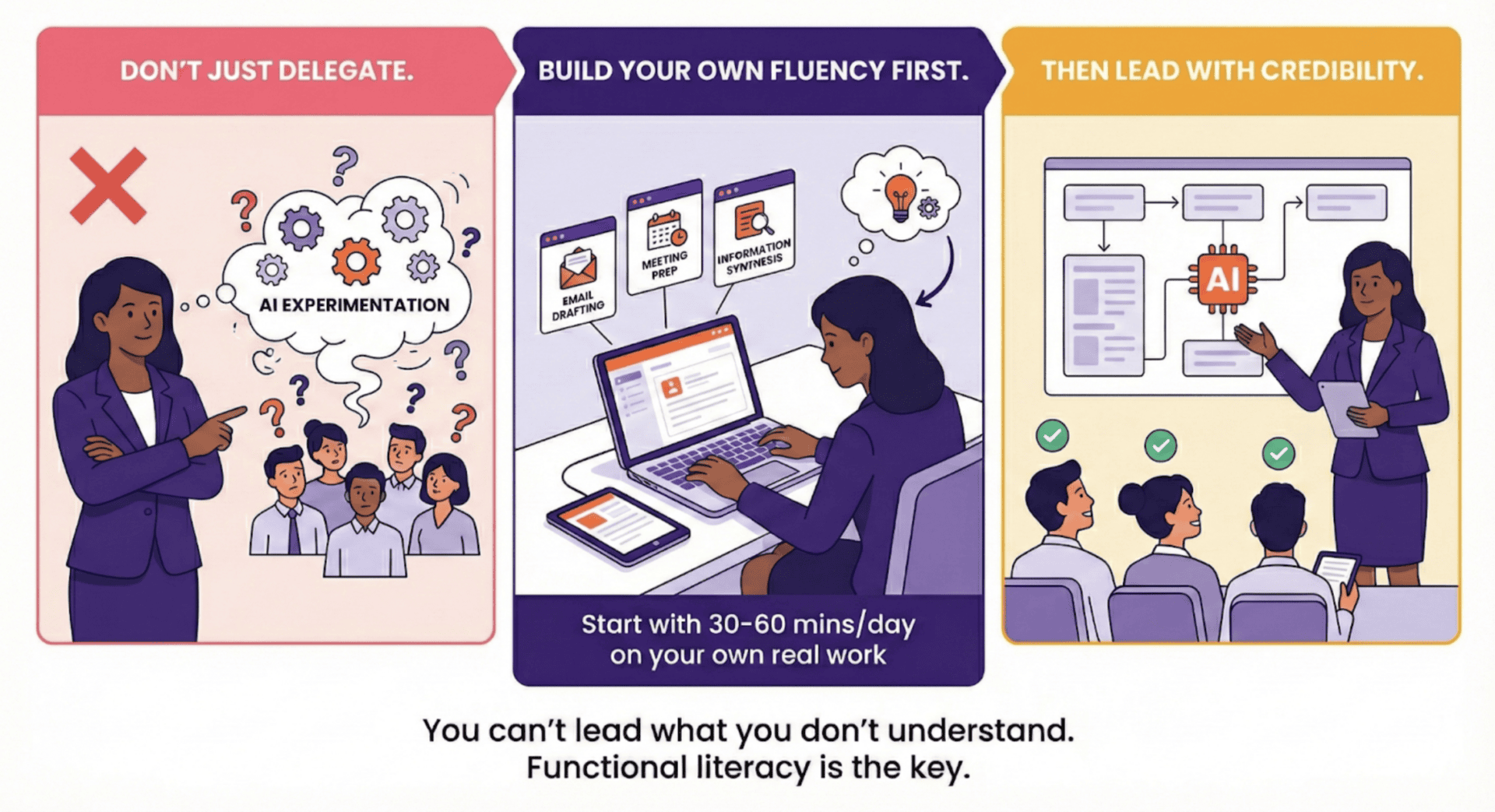

You don’t need to become an AI expert. But you need enough fluency to make good decisions, enough experience to have credibility, and enough structure to help your team use AI safely.

Build Your Own Fluency First

It’s a bit of a truism, but in this case I believe it’s an imperative: simply stated, you can’t lead what you don’t understand. That doesn’t mean you have to be a data scientist (I’m certainly not).

The kind of AI fluency that I’m referencing isn’t about coding or model architecture. It’s functional literacy — enough to use AI responsibly, evaluate claims, and lead change with credibility.

What fluency looks like:

- You can frame good questions — knowing what AI handles well and where you must provide judgment.

- You can evaluate outputs — seeing what’s correct, what’s missing, where AI has overstepped.

- You can challenge vendors — asking what AI actually does, how errors are handled, where humans stay in the loop.

- You can coach your team — helping them pick tasks, interpret outputs, share what they’re learning.

Why your fluency comes first:

You could delegate AI experimentation to a task force. You shouldn’t. By that, I don’t mean you shouldn’t have task forces or be making structured investments of your team’s time. I just mean that our job as leaders goes beyond building task forces. It includes building our own skills.

When you build fluency yourself, you make better buying decisions. You gain credibility with your team. You improve colleague and counterparty conversations. You develop pattern recognition for where AI helps and where it creates work.

How to build fluency without adding a second job:

This is the fun and the rewarding part. Building AI fluency starts with using AI for work you’re already doing.

- Prepare for your next call. Start by prepping on your own. Then give AI the same materials you just read and ask it for a critical analysis and talking points. Compare its insights to what you prepared yourself. Notice where it helps and where it misses context.

- Draft a routine communication. Let AI produce a first draft of a site update or training email. Edit it. Time how long it takes versus starting from scratch.

- Synthesize information you know well. Feed AI your meeting notes or coordinator feedback. Ask for themes and risks. Because you know the material, you can judge accuracy.

Thirty to sixty minutes per day of intentional use will build real fluency, and deliver real value, over a few months.

Extend Fluency to Your Team

Once you’ve started, extend it systematically.

- Set expectations in specific workflows. “Use AI more” isn’t helpful. Instead: “For query response drafting, try AI for first drafts.” Name the workflows. Be specific about what’s expected and what’s off-limits.

- Make experimentation safe. Your team will use AI poorly at first. Create space for sharing failures, asking questions, and admitting “it made a mess” without judgment.

- Designate AI leads. One or two curious team members who go deeper, try more use cases, and help colleagues troubleshoot. Internal coaches, not prompt engineers.

- Build feedback loops. Weekly or monthly, thirty minutes to an hour: what’s working, what’s not, what’s next. Build a shared playbook through lived experience. For example, I have a standing weekly session with our engineers, not to talk about our product, but about how we are using AI internally to accelerate and improve our development processes. The payoff from this time shows up in three ways: 1) consistency: best practices are shared, so our entire team now uses AI tools and processes more consistently; 2) results: we are developing new features and solving problems faster than ever; and 3) mindset: increasingly, there is a built-in assumption that whatever job has to be done, it will be done with AI by our side.

- Reflect it in expectations. As AI becomes part of how work gets done, include it in job descriptions and performance conversations. This will have an impact.

The Institutional Reality

Here’s what is harder to control. Individual fluency is the relatively easy part: you can build personal and team fluency on your own. But you can’t transform the organization alone.

Real transformation — redesigning workflows, deploying AI at scale — requires institutional alignment. Governance frameworks. Leadership buy-in. Cross-functional commitment.

Many institutions have AI policies, security requirements, and approved tools. Work within them. Partner with compliance and IT. Share what you’re learning.

If your institution doesn’t have those policies yet, your fluency positions you to shape them.

Essay 3 will address building institutional foundations. For now, focus on what you control: your capability and your team’s. When you’re ready to make the case for broader change, you’ll have credibility because you’ve done the work yourself.

Identify the Right Work for AI

Not all work is equally ready for AI. The discipline is matching AI capability to your highest-pain workflows.

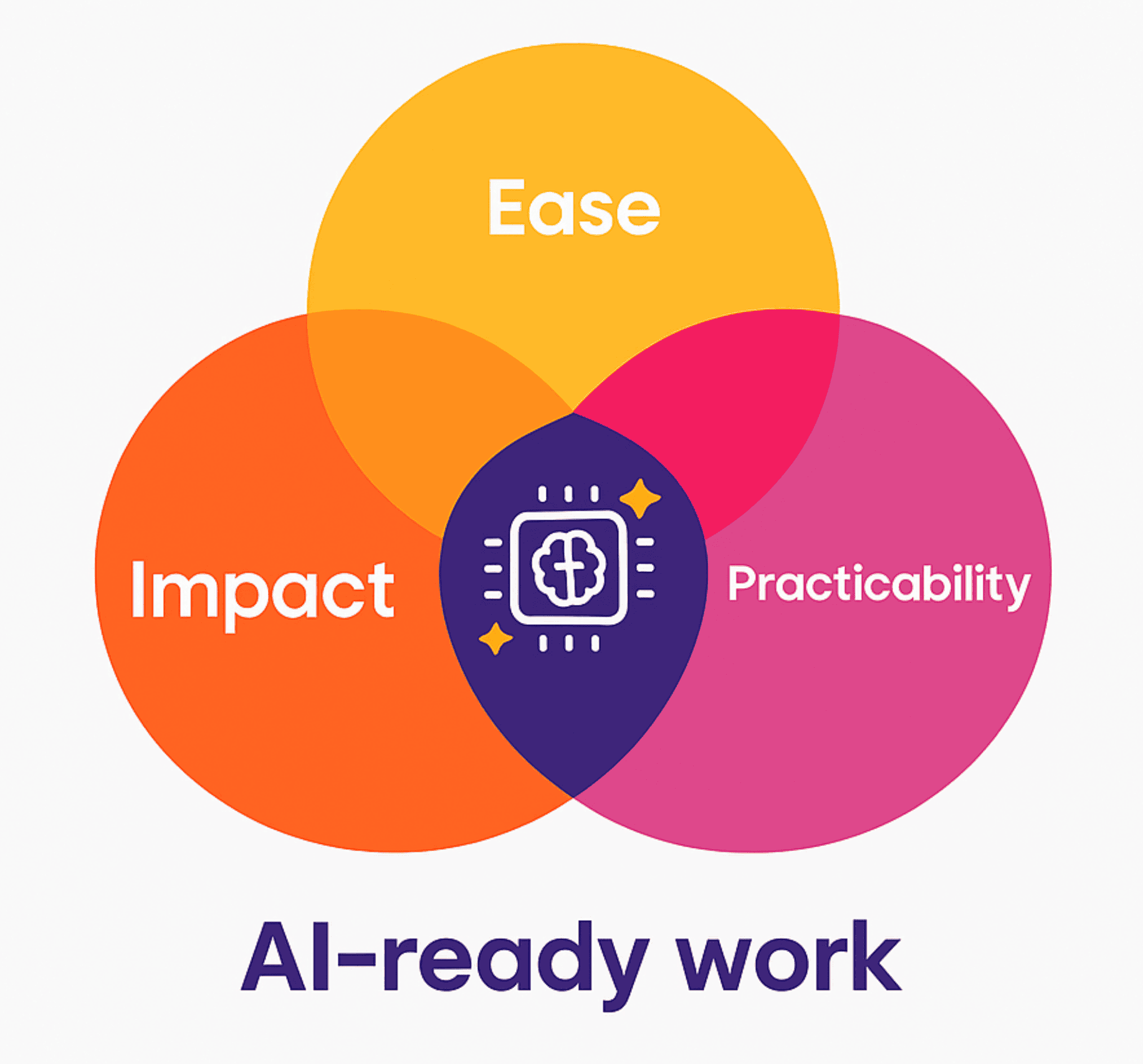

The prioritization lens:

- Impact: How painful is this today? Time, delays, rework, frustration.

- Ease: How ready for AI? Documented processes beat tribal knowledge. Structured inputs beat unstructured. Clear patterns beat pure judgment.

- Practicability: Can you actually change this? Authority, constraints, tooling.

Where to look first:

Selfishly (and objectively), it’s easy enough for me to say that contracts and budgets are particularly compelling use cases for AI — they’re the leading cause of startup delays, the work is structured, and your team already dislikes it. But there is no shortage of places for your team to look – query triage, regulatory documents, protocol summaries, recruitment pre-screening and many other places are also strong candidates.

How to map a workflow:

List the steps. Highlight the waiting — where work sits in queues. Highlight the repetition — what looks the same every time. Highlight the judgment — where experience and relationships matter.

To start, I suggest this rule of thumb: about 60% of most administrative workflows follow patterns; 40% require genuine judgment. Your goal for 2026 should be for AI to handle the 60% while your team focuses on the 40%.

The buying discipline:

Beware false choices. Waiting for your CTMS or other vendor to add AI could mean waiting an uncomfortable amount of time. But what’s likely worse is letting internal IT build something custom for you — projects that rarely scale and are nearly guaranteed to be swallowed by technical debt and ultimately replaced.

The right question: Does this reduce my team’s burden, integrate with how we work, and improve the workflows that matter most?

Essay 2 will go deeper on workflow redesign. Here, the goal is simpler: pick one or two priorities, experiment on real work, build muscle.

What’s Next

This first essay covered the 2026 landscape and how to prepare yourself and your team — fluency, experimentation, and prioritization.

Essay 2 zooms out to the work itself: why administrative work is ground zero, what real workflow redesign looks like, and how to reduce rather than increase your technology burden.

Essay 3 zooms out further to the organization: how roles evolve, what governance requires, and where AI is heading next.

We don’t have all the answers. But we have pattern recognition from watching this unfold across multiple organizations, and I’m happy to share what I’m seeing.

We’re figuring this out together. Let’s keep going.

Jim Wagner is CEO of The Contract Network, where AI is used responsibly under enterprise-grade security controls to help research sites and sponsors optimize clinical trial agreements and budgets. TCN’s AI implementation follows CHAI principles, maintains SOC 2 Type II compliance, and prohibits model training on customer data.