December 22, 2025

Jim Wagner

Rethinking Ourselves, Our Organizations, and the Move to “Human Above the Loop”

Essay 3 of 3: AI Planning for 2026

This is the third essay in a three-part series on what clinical operations leaders should know about AI going into 2026. Essay 1 covered building personal and team fluency — getting yourself and your people ready. Essay 2 addressed targeting administrative work for transformation — applying AI where the pressure is highest and the risk is lowest. This essay looks further ahead: how roles evolve, what governance requires, and how to prepare for AI that doesn’t just assist with tasks but executes entire jobs.

The Next Step

In Essay 2, we described a world where AI assists — it drafts, you review, you send. That model works. It’s delivering value right now.

But it’s not the end of the story.

For certain workflows — those that are high-volume, administratively burdensome, and where errors are recoverable — organizations are beginning to develop greater risk tolerance. And with greater risk tolerance comes significantly greater reward: AI that doesn’t just help with tasks, but executes entire jobs.

This is the shift from “Human in the Loop” (you touch every step) to “Human Above the Loop” (you design the system, set the boundaries, and validate at key checkpoints).

The defining challenge of 2026 isn’t “how do I use this tool?” It is “how do I prepare my organization for workflows where AI plans and executes, and humans oversee?”

The stakes are significant. McKinsey research shows 75–85% of life sciences workflows contain tasks that could move in this direction. Meanwhile, 62% of organizations are already experimenting with agents — but only 23% are scaling even one. High curiosity, low maturity. The gap is the opportunity.

From Tasks to Jobs

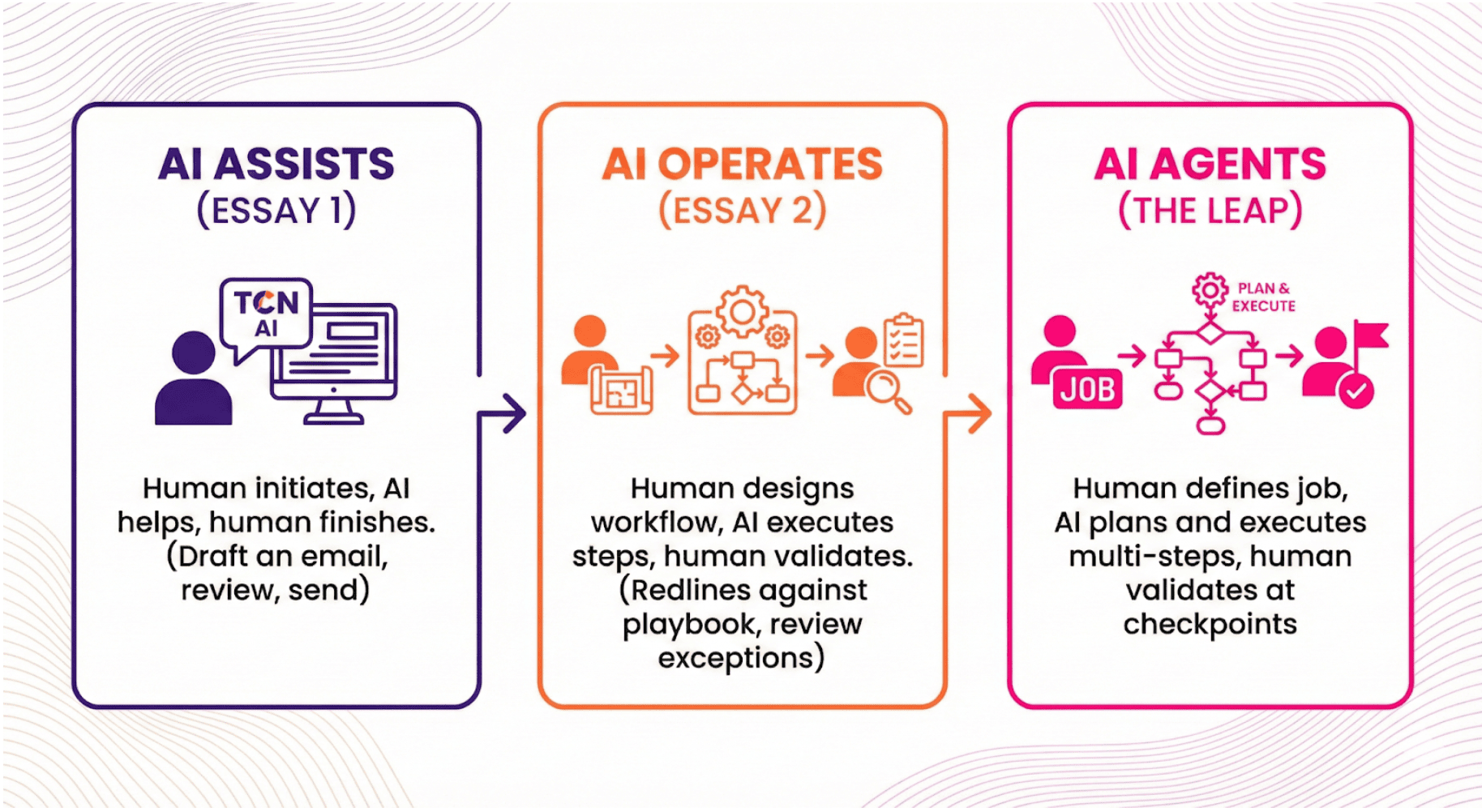

To understand where we’re going, we need to distinguish between three modes of work.

AI Assists (Essay 1 territory): Human initiates, AI helps, human finishes. You ask AI to draft an email; you review and send.

AI Operates (Essay 2 territory): Human designs the workflow, AI executes defined steps, human validates output. AI generates contract redlines against your playbook; you review exceptions.

AI Agents (The Leap): Human defines the job to be done, AI plans and executes multi-step processes, human validates at appropriate checkpoints.

What “Agentic” Actually Looks Like

Most people imagine agentic AI as a “set it and forget it” magic button. That’s not the reality for 2026.

It is not: “AI, negotiate this contract and only call me if there’s a problem.”

Rather, it means handing over jobs made up of routine tasks with few high-risk judgments: “We’re starting a new study with 30 sites. Execute the agreement issuance process.”

The AI then works through a sequence of decisions and actions:

- Determines whether a CDA is in place that covers the new study

- If not, evaluates whether an existing CDA can be cloned and updated

- Runs a parallel process for the CTA — selecting the appropriate template, drafting, and preparing for issuance

- Presents the work at defined checkpoints for human review before distribution
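
The sequence above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any real product: the `Site` flags, the `plan_cda` rules, the `standard_cta` template name, and the `review` callback are all hypothetical stand-ins for logic that would come from your own playbook.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    has_covering_cda: bool = False   # a CDA already covers the new study
    has_clonable_cda: bool = False   # an existing CDA could be cloned and updated


@dataclass
class Packet:
    site: str
    cda_action: str
    cta_template: str
    approved: bool = False


def plan_cda(site: Site) -> str:
    """Decide the CDA step for one site (hypothetical rules)."""
    if site.has_covering_cda:
        return "reuse_existing"
    if site.has_clonable_cda:
        return "clone_and_update"
    return "draft_new"


def select_cta_template(site: Site) -> str:
    """Placeholder choice; real selection logic would follow a playbook."""
    return "standard_cta"


def run_issuance(sites, review):
    """Prepare one packet per site, then stop at a human checkpoint.

    `review` is a callable standing in for the human reviewer. Nothing is
    treated as ready for distribution until it returns True for a packet.
    """
    packets = [Packet(s.name, plan_cda(s), select_cta_template(s)) for s in sites]
    for packet in packets:
        packet.approved = review(packet)
    ready = [p for p in packets if p.approved]
    held = [p for p in packets if not p.approved]
    return ready, held


sites = [
    Site("Site A", has_covering_cda=True),
    Site("Site B", has_clonable_cda=True),
    Site("Site C"),
]
# Example reviewer policy: hold anything that needs a brand-new CDA.
ready, held = run_issuance(sites, review=lambda p: p.cda_action != "draft_new")
```

The point of the structure is that the agent does the planning and drafting for every site, while distribution stays gated behind the reviewer.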

Early agentic workflows won’t be fully autonomous. AI plans and executes most steps; humans approve at checkpoints. Over time, as trust builds and governance matures, those checkpoints move further apart. But the starting point is AI doing the work between checkpoints — not AI doing everything while you sleep.

This distinction matters. The hype suggests full autonomy. The reality is graduated trust. Organizations that understand this will adopt sooner and more successfully than those waiting for the magic button.

Why This Matters

The volume of administrative work means “human-in-every-step” doesn’t scale. If every agreement, every query, every budget reconciliation requires human initiation and human execution and human closure, you’re capped by headcount. Agentic models break that constraint — selectively, for the right workflows, with appropriate oversight.

The Glass Box

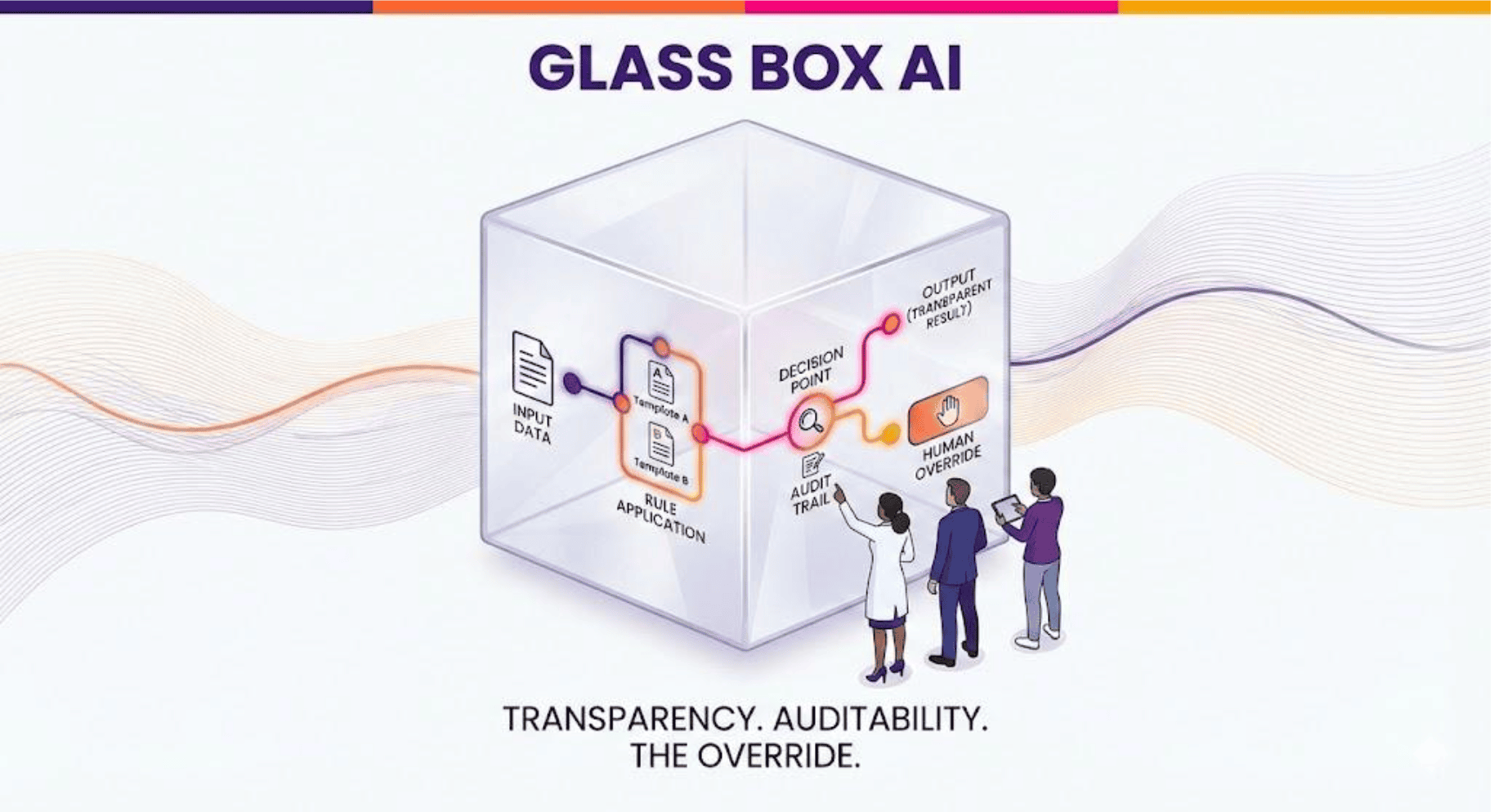

The problem with handing a “job” to an agent is visibility. You cannot trust what you cannot see.

Most organizations treat AI as a black box — inputs go in, outputs come out, trust is assumed. That’s acceptable when AI drafts an email you’re going to read anyway. It’s not acceptable when AI executes a twenty-step process across thirty sites.

Responsible leaders must demand “Glass Box” AI — systems where the reasoning is visible, not just the result.

To move above the loop, three things must be visible at every checkpoint:

Transparency. What data did the agent see? What rule did it apply? Why did it choose Template A over Template B? The answer can’t be “the model decided.” The answer needs to be traceable.

Auditability. A clear trail of each decision point. If the agent cloned an existing CDA, why that one? If it flagged a clause for human review, what triggered the flag? Six months from now, you need to reconstruct the path.

The Override. The ability to intervene at any checkpoint, correct the course, and continue — or revert to manual control entirely. The system must assume humans will sometimes need to step in.
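
One way to picture these three properties is as an append-only decision log. The sketch below is illustrative only; the `Decision` fields, the `AuditTrail` class, and the `playbook_rule_7` label are assumptions made for the example, not a reference to any particular system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    step: str
    inputs_seen: dict            # transparency: what data the agent saw
    rule_applied: str            # transparency: which rule it applied
    outcome: str
    decided_by: str = "agent"
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditTrail:
    """Append-only log: entries are added, never edited or removed."""

    def __init__(self):
        self._log = []

    def record(self, decision: Decision) -> Decision:
        self._log.append(decision)
        return decision

    def history(self, step: str) -> list:
        """Auditability: every entry for a step, oldest first."""
        return [d for d in self._log if d.step == step]

    def override(self, step: str, reviewer: str, new_outcome: str, reason: str) -> Decision:
        """The override: append a human correction and keep the agent's entry."""
        if not self.history(step):
            raise KeyError(f"no recorded decision for step {step!r}")
        return self.record(
            Decision(step, {"reason": reason}, "human_override", new_outcome, decided_by=reviewer)
        )

    def current(self, step: str) -> Decision:
        """The latest entry for a step is the one in effect."""
        return self.history(step)[-1]


trail = AuditTrail()
trail.record(Decision("select_template", {"site_type": "academic"}, "playbook_rule_7", "Template A"))
trail.override("select_template", "reviewer_1", "Template B", "site requires an addendum")
```

Because the override is appended rather than written over the agent's entry, the original choice and the human correction can both be reconstructed months later.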

The Regulatory Reality

Clinical research operates in a regulated environment. That’s not going to change. Agentic AI doesn’t shift accountability — sponsors, sites, and investigators remain responsible. But it changes how you demonstrate control.

Regulators won’t ask whether you used AI. They’ll ask whether you maintained control while using AI. The Glass Box is how you answer that question.

Interoperability is plumbing. Transparency is the control room. Build the control room first.

From Managers and Doers to Architects

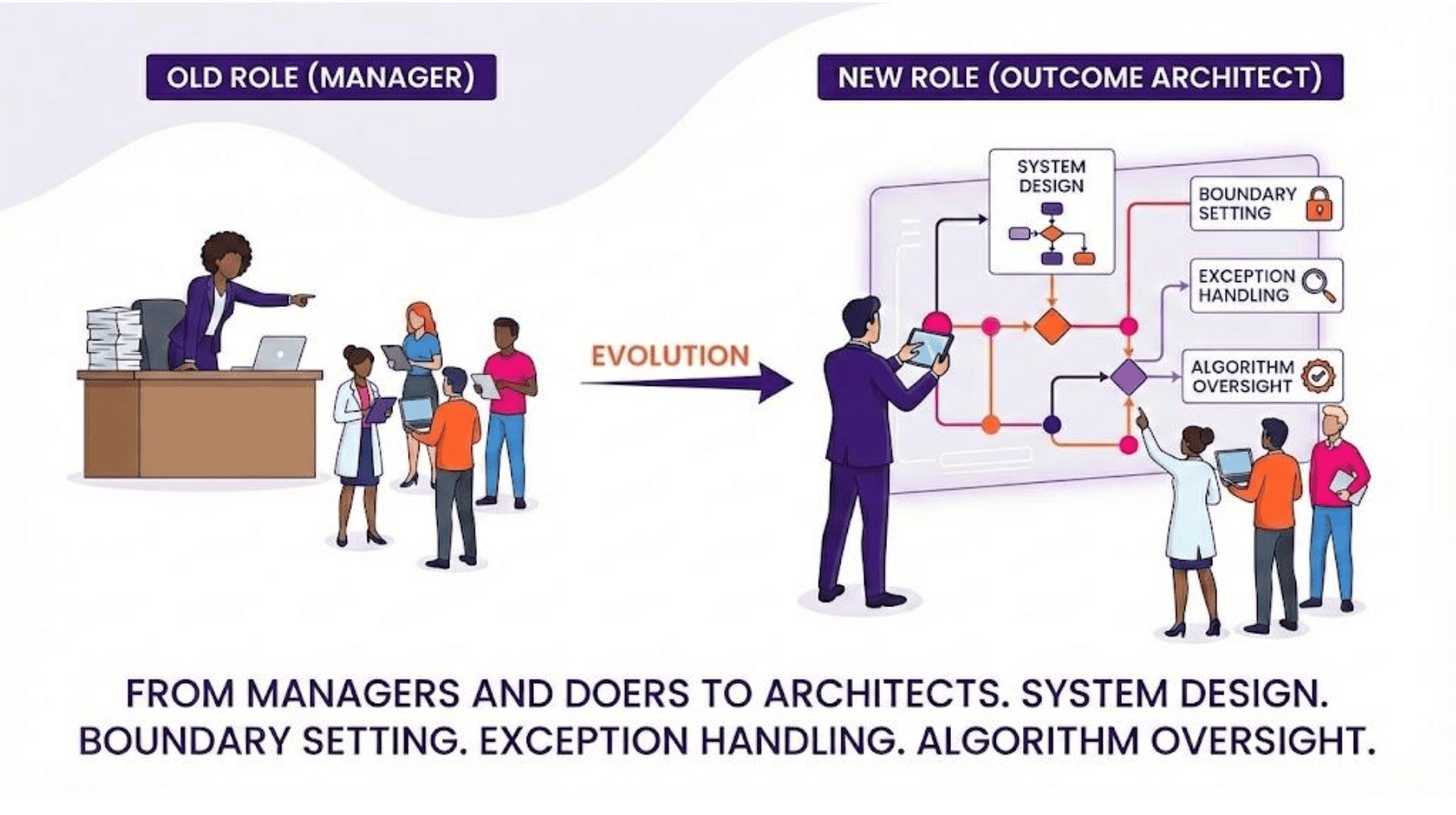

If AI is doing the work, what are we doing?

This is the question beneath the surface of every conversation about agentic AI. It’s the question your team is asking, even if they haven’t said it out loud. It deserves a direct answer.

You’re moving from managing and leading “doers” to being an Outcome Architect: defining jobs, designing workflows, setting boundaries, and validating results.

This isn’t a demotion. It’s an elevation. But it requires different skills than most of us were trained for.

New Competencies

- System Design: How do I structure a workflow so an agent can navigate it? What are the decision points? What inputs does the agent need at each stage? This requires making explicit what has always been implicit — the logic, the rules, the exceptions.

- Boundary Setting: What can the agent decide? What requires human judgment? Not abstract principles — specific, workflow-level rules. “The agent can select from approved templates but cannot modify indemnification language.” These boundaries are your governance in action.

- Exception Handling: When the agent escalates, can I diagnose why? Can I resolve efficiently and feed that learning back into the system? The human above the loop isn’t just approving outputs — they’re improving the system over time.

- Algorithm Oversight: Just as you’re responsible for patient safety and data integrity today, you’re responsible for the quality and reliability of AI-driven processes tomorrow.
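
The boundary-setting example above (“the agent can select from approved templates but cannot modify indemnification language”) can be written down as an explicit, testable rule. The sketch below is a hypothetical illustration: the template names, the action format, and the choice to allow edits to other clauses are assumptions, and a real policy would be set by your own governance.

```python
# Hypothetical policy data; real values would come from your playbook.
APPROVED_TEMPLATES = {"standard_cta", "academic_cta"}
PROTECTED_CLAUSES = {"indemnification"}


def check_action(action: dict) -> str:
    """Return 'allow' or 'escalate' for a proposed agent action."""
    kind = action.get("kind")
    if kind == "select_template":
        # The agent may only pick from the approved list.
        return "allow" if action.get("template") in APPROVED_TEMPLATES else "escalate"
    if kind == "modify_clause":
        # Protected language always goes to a human.
        # Assumption: edits to other clauses are allowed.
        return "escalate" if action.get("clause") in PROTECTED_CLAUSES else "allow"
    # Anything the policy does not recognize defaults to human review.
    return "escalate"


print(check_action({"kind": "select_template", "template": "standard_cta"}))
print(check_action({"kind": "modify_clause", "clause": "indemnification"}))
```

Defaulting unknown actions to escalation is a deliberate design choice in this sketch: the agent's permissions are whatever the policy names, and nothing more.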

The Capability Gap

Here’s the challenge: 45% of clinical research sites feel unprepared for this shift. Most professionals in our industry weren’t trained for system design and oversight. They were trained to do the work — and they’re good at it.

Meanwhile, demand for AI fluency has grown 7x in two years. The gap between what organizations need and what their people know how to do is real.

But this is a skill-building challenge, not a headcount challenge. The same people who are excellent at doing the work can become excellent at designing the systems that do the work — if they’re given the opportunity and support to make the transition.

What This Means for Roles

For coordinators and CRAs, the shift means less time on routine processing and more time on complex cases, sponsor relationships, and quality oversight. The repetitive work compresses. The interesting work expands. The job is not eliminated; it becomes more interesting.

For managers, the shift means less time reviewing routine work, more time designing workflows, setting policies, and coaching teams on exception handling.

For leadership, accountability shifts from “did my team do the work right” to “did I design a system that works and catches failures?” This is a higher level of responsibility — and it requires a different kind of engagement with how work actually gets done.

The 2026 Roadmap

You cannot deploy agentic workflows tomorrow if your foundation isn’t solid today. 2026 is the year of pre-investment.

Phase 1: The Transparency Audit (Q1-Q2 2026)

Goal: Learn to see the work.

Audit your heavy-admin workflows — study start-up, site activation, query resolution, agreement processing. Not to optimize them yet, but to document them.

The key question at each step: “If an agent executed this process, how would I verify each decision point?”

This forces you to make explicit what’s currently implicit. Why do we use this template for this type of site? What triggers an escalation to legal review? How do we know a budget is “at market”?

Most organizations discover they can’t answer these questions clearly. The logic lives in people’s heads. Institutional memory. Gut feel. You cannot design an agentic workflow on gut feel. Document the logic first.

Phase 2: The Lighthouse Job (Q3-Q4 2026)

Goal: Prove agentic capability on one complete job to be done.

Pick a single workflow — high-volume, text-heavy, non-patient-facing, recoverable errors. Agreement issuance for study start-up is a strong candidate.

Don’t just use AI to draft individual documents. Design a multi-step workflow where AI plans the process, executes each step, and presents work at defined checkpoints.

Practice “Human Above the Loop” for real. Human reviews the agent’s plan and outputs at Checkpoint 1. Approves, adjusts, or flags exceptions. Agent incorporates feedback and continues to Checkpoint 2. This is how you build organizational muscle for a new way of working.

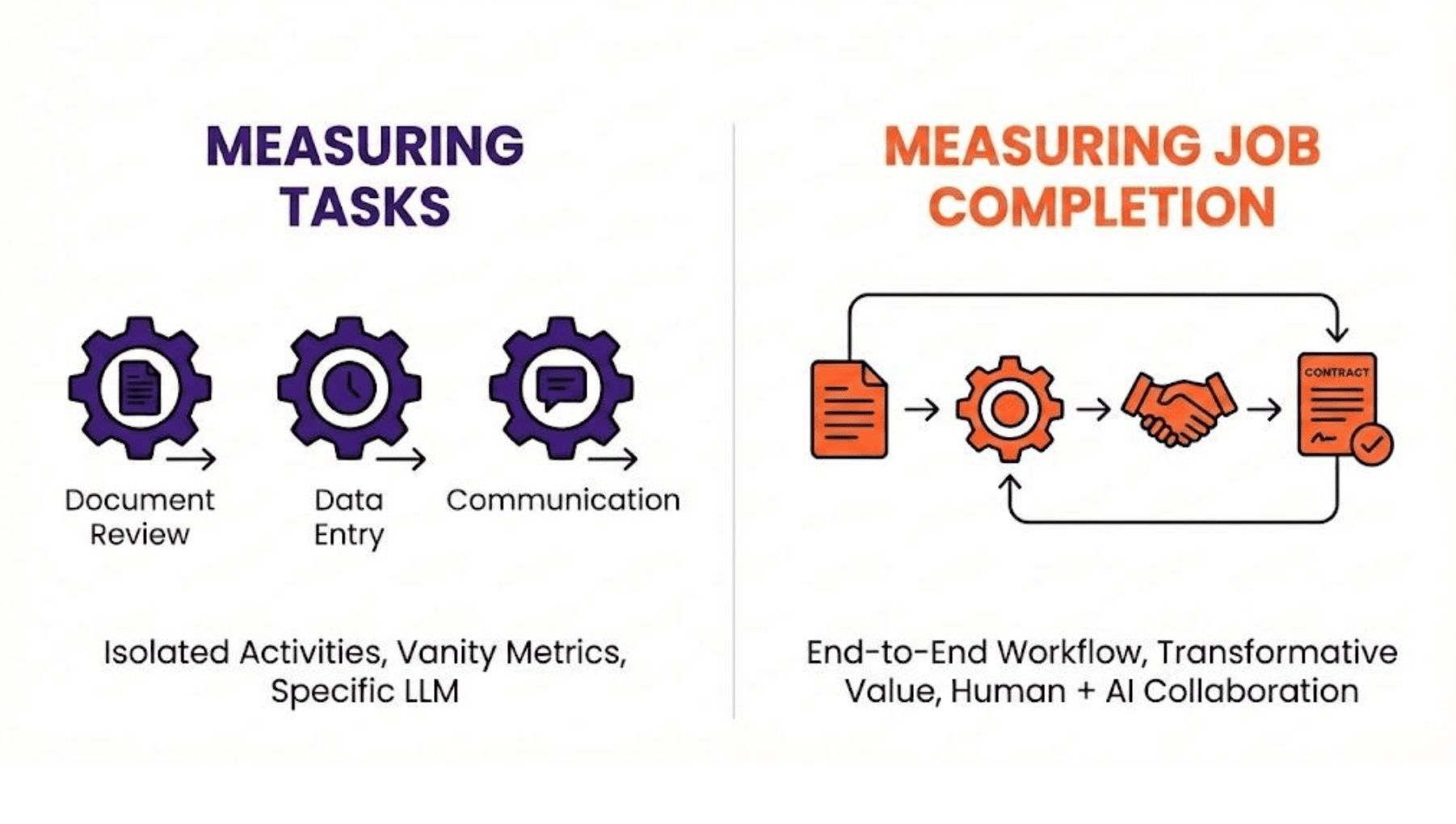

Phase 3: Measuring Tasks vs Job Completion

When we think about AI efficacy, the default move is to evaluate a specific LLM’s performance on task-based benchmarks. While this helps give a general sense of the models’ increasing capability, too often these are vanity metrics.

To implement at scale and to achieve larger transformative ambitions, we need to start thinking about and measuring “end-to-end outcomes”: those produced by one or more agents working together or in concert with humans. From there, we can set targets such as a 30-50% reduction in human time spent on the entire job of agreement issuance to 30 sites for a study start-up, rather than on any individual task. That’s your proof point for expanding to other workflows.
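
As a worked example of measuring the job rather than the task, the sketch below compares total human hours for the whole job before and after. The hour figures are illustrative placeholders, not measurements, and the function and category names are hypothetical.

```python
def job_time_reduction(baseline_hours: float, agentic_hours: float) -> float:
    """Fractional reduction in human time for the whole job."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return (baseline_hours - agentic_hours) / baseline_hours


# Illustrative placeholder numbers for one 30-site study start-up.
baseline = {"cda_checks": 15, "cta_drafting": 60, "issuance": 15}
# Human time that remains once an agent works between checkpoints.
agentic = {"checkpoint_review": 40, "exception_handling": 14}

reduction = job_time_reduction(sum(baseline.values()), sum(agentic.values()))
meets_target = 0.30 <= reduction   # lower bound of the 30-50% target
print(f"{reduction:.0%} reduction, meets target: {meets_target}")
```

Note that the "after" figure counts the human time spent on checkpoint review and exceptions. Leaving that out would overstate the gain and bring back the vanity-metric problem at the job level.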

Questions to Ask Vendors

- What’s your agentic roadmap? When does AI move from task assistance to job execution?

- How do you surface the reasoning? Can I see why the agent made each decision?

- What’s the checkpoint model? Where do humans validate before the process continues?

- How do you handle exceptions? What happens when the agent hits a decision it can’t make?

Building Toward Trust

By the end of 2026, organizations will separate into two groups.

AI-Ready: Have pre-invested in governance, workflow documentation, and team capability. As agentic tools mature, they’re positioned to adopt.

AI-Waiting: Held back for the “right” solution. When the shift accelerates, they face a capability gap that’s hard to close quickly. The foundation work takes time. You can’t cram it.

The transition to agentic workflows isn’t a technology upgrade. It’s a trust upgrade — trust in your governance, trust in your workflows, trust in your people to operate at a higher level.

What We’re Not Saying

We’re not declaring human work obsolete. We’re identifying specific, high-burden workflows where the risk/reward equation is shifting — and preparing for that shift deliberately rather than reactively.

We’re not promising fully autonomous AI systems in 2026. We’re describing a trajectory where AI takes on more of the work between human checkpoints, and building the foundation to participate in that trajectory.

We’re not saying every organization needs to rush toward agentic adoption. We’re saying every organization needs to understand where this is heading and make deliberate choices about how to prepare.

The Future Worth Building

The future isn’t AI replacing humans. It’s humans and AI working together in new configurations, with humans elevated to design, oversight, and judgment.

The routine work gets done faster and more consistently. The interesting work gets more attention. The impossible-to-staff work becomes possible.

That’s a future worth building toward.

This concludes the three-part series. Essay 1 built fluency — getting yourself and your team ready. Essay 2 targeted the work — focusing AI on administrative burden where the payoff is highest. Essay 3 prepared the organization — governance, roles, and the foundation for what’s next. Together, they offer a roadmap for clinical operations leaders navigating the shift ahead.

Jim Wagner is CEO of The Contract Network, where AI is used responsibly under enterprise-grade security controls to help research sites and sponsors optimize clinical trial agreements and budgets. TCN’s AI implementation follows CHAI principles, maintains SOC 2 Type II compliance, and prohibits model training on customer data.